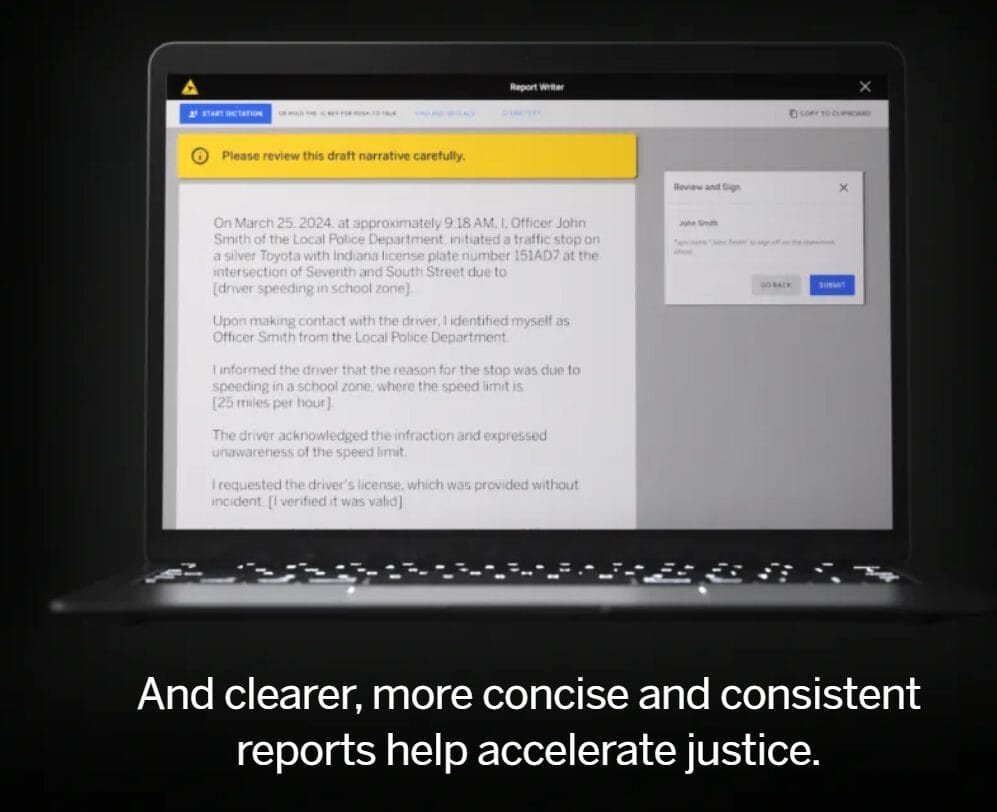

In April, public-safety contractor Axon debuted what it calls “Draft One,” which Axon describes as a “revolutionary new software product that drafts high-quality police report narratives in seconds based on auto-transcribed body-worn camera audio.”

“Draft One leverages Generative Artificial Intelligence (AI) and includes a range of critical safeguards, requiring every report to be reviewed and approved by a human officer, ensuring accuracy and accountability of the information before reports are submitted,” the company said in a press release.

The company added: “Agency trials have resulted in roughly one hour of time saved per day on paperwork. For every eight officers who use Draft One during their day, that translates to an extra eight-hour shift or more. When officers can spend more time connecting with the community and taking care of themselves both physically and mentally, they can make better decisions that lead to more successful de-escalated outcomes.”

Draft One can also compile a report from multiple bodycam clips, along with other third-party reports not previously logged in the Axon system.

Draft One includes a range of strict safeguards and requires that humans make the decisions in key moments:

- Officer required to review and sign off: Once the report narrative has been edited and key information has been added, officers are required to sign off on the report’s accuracy before submitting it for the next round of human review.

- Adheres to the audio data: Report narratives are drafted strictly from the audio transcript from the body-worn camera recording. Axon calibrated the underlying model for Draft One to prevent speculation or embellishments.

- Controls to ensure proofreading: Each draft includes placeholders that officers are required to edit, by either adding more information or removing the placeholder.

- Report drafts are restricted to minor incidents and charges: The default experience limits report drafts to minor incident types and charge levels, specifically excluding incidents where arrests took place and for felonies. With this default, agencies can get started with Draft One and gain tremendous value in expediting report writing for the overwhelming majority of reports officers submit. This allows agencies the option to gain experience on low severity reports first, then expand to more severe reports once they gain experience in how to use the tool effectively. Agencies can set a policy determining which reports are eligible for Draft One utilization, and the tool then ensures enforcement of the agency policy.

“Every single officer in the U.S. writes police reports, often every day and normally multiple times a day. As we’ve done with Draft One, harnessing the power of AI will prove to be one of the most impactful technological advancements of our time to help scale police work and revolutionize the way public safety operates,” Axon CEO and founder Rick Smith said.

Draft One is currently being put to use by some police forces throughout the U.S.

The Associated Press reported in August that some forces are raving about the tool because it can generate, in seconds, reports that would normally take significantly longer. The AP wrote:

A body camera captured every word and bark uttered as police Sgt. Matt Gilmore and his K-9 dog, Gunner, searched for a group of suspects for nearly an hour.

Normally, the Oklahoma City police sergeant would grab his laptop and spend another 30 to 45 minutes writing up a report about the search. But this time he had artificial intelligence write the first draft.

Pulling from all the sounds and radio chatter picked up by the microphone attached to Gilmore’s body camera, the AI tool churned out a report in eight seconds.

“It was a better report than I could have ever written, and it was 100% accurate. It flowed better,” Gilmore said. It even documented a fact he didn’t remember hearing — another officer’s mention of the color of the car the suspects ran from.

Built with the same technology as ChatGPT and sold by Axon, best known for developing the Taser and as the dominant U.S. supplier of body cameras, it could become what Gilmore describes as another “game changer” for police work.

[… In] Lafayette, Indiana, where Police Chief Scott Galloway told the AP that all of his officers can use Draft One on any kind of case and it’s been “incredibly popular” since the pilot began earlier this year. Or in Fort Collins, Colorado, where police Sgt. Robert Younger said officers are free to use it on any type of report, though they discovered it doesn’t work well on patrols of the city’s downtown bar district because of an “overwhelming amount of noise.”

Naturally, however, some are concerned about how accurate and reliable the technology is, and about how much effort officers will put into making sure each report is as accurate as it needs to be.

Oklahoma City community activist aurelius francisco [sic], a co-founder of the Foundation for Liberating Minds in Oklahoma City, says this tech is “deeply troubling”:

“The fact that the technology is being used by the same company that provides Tasers to the department is alarming enough.”

“[These automated reports will] ease the police’s ability to harass, surveil and inflict violence on community members. While making the cop’s job easier, it makes Black and brown people’s lives harder.”

The Electronic Frontier Foundation (EFF) also listed a number of potential concerns with the technology in May. It warns of issues such as the “algorithms’ ability to accurately process and distinguish between the wide range of languages, dialects, vernacular, idioms and slang people use.” The group also worries about the accuracy of reports drawn from shaky cameras in motion, and about the AI’s ability to accurately transcribe what occurred.

Other concerns include the technology having “the power to obscure human agency. Police officers who deliberately speak with mistruths or exaggerations to shape the narrative available in body camera footage now have even more of a veneer of plausible deniability with AI-generated police reports,” EFF notes.

And should the AI make an error, will police officers honestly correct the mistakes?

EFF suggested:

“Moreover, if the AI-generated report is incorrect, can we trust police will contradict that version of events if it’s in their interest to maintain inaccuracies? On the flip side, might AI report writing go the way of AI-enhanced body cameras? In other words, if the report consistently produces a narrative from audio that police do not like, will they edit it, scrap it, or discontinue using the software altogether?”

AUTHOR COMMENTARY

This is just ridiculous. Look, I understand that writing up all these reports can be very tedious and tiresome – which is largely the fault of bureaucratic bungling that, in many instances, demands police write the equivalent of an entire class book report for a single simple case – but we also know that it is human nature, not just cops’ nature, to be complacent and lazy.

Furthermore, on top of general malaise, we know there are many bad-attitude and criminal coppers in this country who already manipulate reports, frame identities, lie and stage events. With this AI integration, we can expect even greater manipulation and further reduced integrity.

We also know how often AI algorithms are incredibly, even laughably, inaccurate.

Proverbs 12:24 The hand of the diligent shall bear rule: but the slothful shall be under tribute.

Proverbs 15:19 The way of the slothful man is as an hedge of thorns: but the way of the righteous is made plain.

This is a mess waiting to happen, and I have no doubt that we will eventually hear of stories where people were wrongfully charged and jailed because the AI “hallucinated” and the officer couldn’t be bothered to check it.

[7] Who goeth a warfare any time at his own charges? who planteth a vineyard, and eateth not of the fruit thereof? or who feedeth a flock, and eateth not of the milk of the flock? [8] Say I these things as a man? or saith not the law the same also? [9] For it is written in the law of Moses, Thou shalt not muzzle the mouth of the ox that treadeth out the corn. Doth God take care for oxen? [10] Or saith he it altogether for our sakes? For our sakes, no doubt, this is written: that he that ploweth should plow in hope; and that he that thresheth in hope should be partaker of his hope. (1 Corinthians 9:7-10).

The WinePress needs your support! If God has laid it on your heart to want to contribute, please prayerfully consider donating to this ministry. If you cannot gift a monetary donation, then please donate your fervent prayers to keep this ministry going! Thank you and may God bless you.
